Stockfish NNUE


Stockfish NNUE, a Stockfish branch by Hisayori Noda aka Nodchip, which uses Efficiently Updatable Neural Networks - stylized as ƎUИИ or reversed as NNUE - to replace its standard evaluation.

NNUE, introduced in 2018 by Yu Nasu [2], was previously applied successfully in Shogi evaluation functions embedded in a Stockfish-based search [3], such as YaneuraOu [4] and Kristallweizen [5]. YaneuraOu's author Motohiro Isozaki made the then hard-to-believe prediction that NNUE could increase Stockfish's strength by around 100 points, almost one year before this was demonstrated [6] [7].

In 2019, Nodchip incorporated NNUE into Stockfish 10 - as a proof of concept, and with the intention to give something back to the Stockfish community [8].

After support and announcements by Henk Drost in May 2020 [9] and subsequent enhancements, Stockfish NNUE was established and recognized. In summer 2020, with more people involved in testing and training, the computer chess community reacted enthusiastically to its rapidly rising playing strength with different networks trained using a mixture of supervised and reinforcement learning methods. Despite the approximately halved search speed, Stockfish NNUE became stronger than its original [10].

In August 2020, Fishtest revealed that Stockfish NNUE was stronger than the classical version by at least 80 Elo [11]. In July 2020, the playing code of NNUE had been put into the official Stockfish repository as a branch for further development and examination. In August, that playing code was merged into the master branch and became an official part of the engine. The training code remained in Nodchip's repository [12] [13] for a while and was then replaced by PyTorch NNUE training [14] [15]. On September 02, 2020, Stockfish 12 was released with a huge jump in playing strength due to the introduction of NNUE and further tuning [16].


NNUE Structure

The neural network consists of four layers. The input layer is heavily overparametrized, feeding in the board representation for all king placements per side [17].

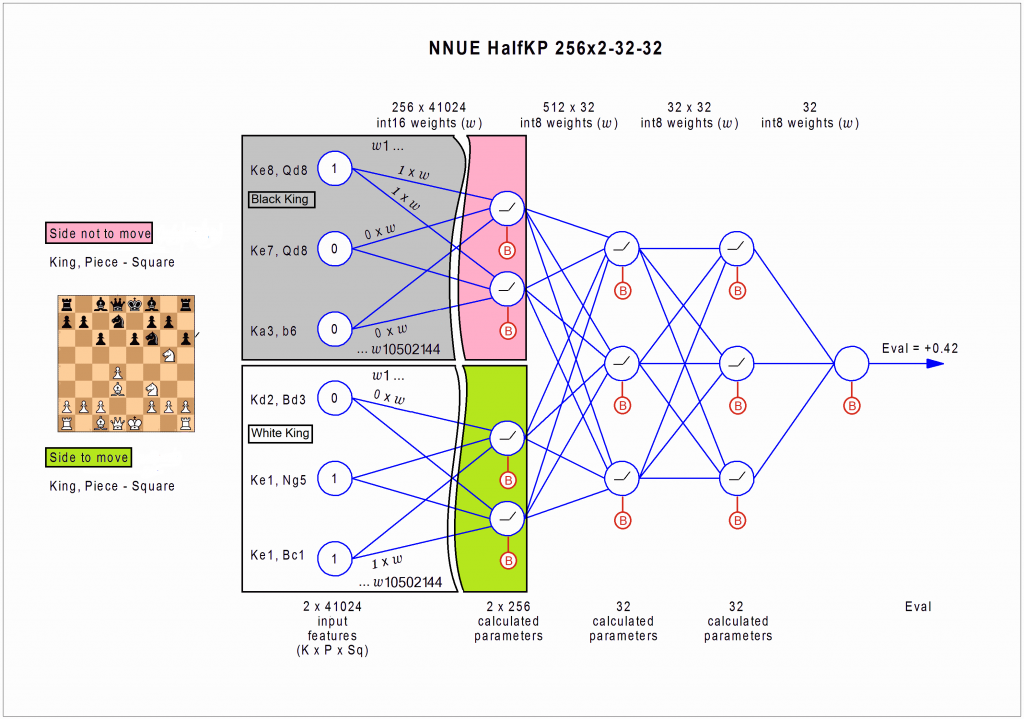

HalfKP

The so-called HalfKP structure consists of two halves covering the input layer and the first hidden layer. Each half of the input layer is associated with one of the two kings and is cross-coupled with the side-to-move or side-not-to-move half of the first hidden layer. For each black or white king placement, the 10 non-king piece kinds (five piece types for each color) on their particular squares are the boolean {0,1} inputs, along with a relic from Shogi piece drops (BONA_PIECE_ZERO), giving 64 x (64 x 10 + 1) = 41,024 inputs for each half. These are multiplied by a 16-bit integer weight vector for 256 outputs per half, in total 256 x 41,024 = 10,502,144 weights. As emphasized by Ronald de Man in a CCC forum discussion [18], the input weights are arranged in such a way that color-flipped king-piece configurations in both halves share the same index. However, and this also seems to be a relic from Shogi with its 180-degree rotational symmetry on the 9x9 board, rotation (xor 63) is applied instead of vertical flipping (xor 56) [19].
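The input counts and the shared-index property can be illustrated with a small sketch. The index layout below (king square major, then piece kind, then piece square, with slot 0 reserved for BONA_PIECE_ZERO) is a simplifying assumption made for illustration and is not claimed to match the exact ordering in the Stockfish source; the names `orient` and `halfkp_index` are likewise hypothetical.

```python
# Simplified HalfKP indexing sketch. The ordering of features is an assumption
# for illustration; only the counts and the xor-63 rotation come from the text.
NUM_SQUARES = 64
NUM_PIECE_KINDS = 10  # 5 non-king piece types x 2 colors
FEATURES_PER_KING = NUM_SQUARES * NUM_PIECE_KINDS + 1  # +1 for BONA_PIECE_ZERO
INPUTS_PER_HALF = NUM_SQUARES * FEATURES_PER_KING
HIDDEN_PER_HALF = 256

WHITE, BLACK = 0, 1
PIECE_TYPES = ("pawn", "knight", "bishop", "rook", "queen")


def orient(perspective, square):
    """Rotate the board by 180 degrees (xor 63) when seen from Black's side."""
    return square ^ 63 if perspective == BLACK else square


def halfkp_index(perspective, king_square, piece_type, piece_color, square):
    """Index of one (king square, piece, piece square) feature in one half."""
    # Pieces of the perspective's own color come first, so that color-flipped
    # configurations map to the same index in both halves.
    own = 0 if piece_color == perspective else 1
    kind = 2 * PIECE_TYPES.index(piece_type) + own
    # Slot 0 of every king block is reserved for BONA_PIECE_ZERO.
    piece_index = 1 + kind * NUM_SQUARES + orient(perspective, square)
    return orient(perspective, king_square) * FEATURES_PER_KING + piece_index


print(INPUTS_PER_HALF)                    # inputs per half
print(INPUTS_PER_HALF * HIDDEN_PER_HALF)  # input-layer weights
```

With squares numbered a1 = 0 to h8 = 63, a white king on e1 (4) with a white knight on b1 (1), seen from White, yields the same index as a black king on d8 (59) with a black knight on g8 (62), seen from Black. That is the rotated rather than mirrored counterpart described above.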

The efficiency of NNUE is due to the incremental update of the input layer outputs in make and unmake move, where only a tiny fraction of its neurons needs to be considered for non-king moves. The remaining three layers, with 2x256x32, 32x32 and 32x1 weights, are computationally less expensive. The hidden layers apply a ReLU activation [20] [21], best calculated using appropriate SIMD instructions performing fast 8-bit/16-bit integer vector arithmetic, like MMX, SSE2 or AVX2 on x86/x86-64, or, if available, AVX-512.
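The incremental update can be sketched as follows. This is a minimal illustration in plain Python rather than SIMD code; the function names `refresh` and `update`, the demo weights from `make_demo_weights`, and the small feature count used in the example are all assumptions and do not come from the Stockfish source.

```python
import random

HIDDEN = 256  # accumulator width per half, as described for HalfKP


def make_demo_weights(num_features, seed=0):
    """Random small integer weights, standing in for a trained network."""
    rng = random.Random(seed)
    return [[rng.randint(-50, 50) for _ in range(HIDDEN)]
            for _ in range(num_features)]


def refresh(active, weights, biases):
    """Full recomputation: biases plus the weights of every active feature."""
    acc = list(biases)
    for feature in active:
        for i, w in enumerate(weights[feature]):
            acc[i] += w
    return acc


def update(acc, removed, added, weights):
    """Incremental update: touch only the features changed by the move."""
    new_acc = list(acc)
    for feature in removed:
        for i, w in enumerate(weights[feature]):
            new_acc[i] -= w
    for feature in added:
        for i, w in enumerate(weights[feature]):
            new_acc[i] += w
    return new_acc


weights = make_demo_weights(100)
biases = [1] * HIDDEN
before = refresh({3, 17, 42}, weights, biases)
# A quiet non-king move: one feature disappears, one appears.
after = update(before, removed=[17], added=[18], weights=weights)
print(after == refresh({3, 18, 42}, weights, biases))
```

Unmaking the move is the same operation with `removed` and `added` swapped. A king move changes the king square of its own half, so every feature index in that half changes and that half would need a full `refresh` in this sketch.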

NNUE layers in action [22]

Explanation by Ronald de Man [23], who did the Stockfish NNUE port to CFish [24]:

The accumulator has a "white king" half and a "black king" half, where each half is a 256-element vector of 16-bit ints, which is equal to the sum of the weights of the "active" (pt, sq, ksq) features plus a 256-element vector of 16-bit biases.

The "transform" step of the NNUE evaluation forms a 512-element vector of 8-bit ints where the first half is formed from the 256-element vector of the side to move and the second half is formed from the 256-element vector of the other side. In this step the 16-bit elements are clipped/clamped to a value from 0 to 127. This is the output of the input layer.

This 512-element vector of 8-bit ints is then multiplied by a 32x512 matrix of 8-bit weights to get a 32-element vector of 32-bit ints, to which a vector of 32-bit biases is added. The sum vector is divided by 64 and clipped/clamped to a 32-element vector of 8-bit ints from 0 to 127. This is the output of the first hidden layer.

The resulting 32-element vector of 8-bit ints is multiplied by a 32x32 matrix of 8-bit weights to get a 32-element vector of 32-bit ints, to which another vector of 32-bit biases is added. These ints are again divided by 64 and clipped/clamped to 32 8-bit ints from 0 to 127. This is the output of the second hidden layer.

This 32-element vector of 8-bit ints is then multiplied by a 1x32 matrix of 8-bit weights (i.e. the inner product of two vectors is taken). This produces a 32-bit value to which a 32-bit bias is added. This gives the output of the output layer.

The output of the output layer is divided by FV_SCALE = 16 to produce the NNUE evaluation. SF's evaluation then takes some further steps such as adding a Tempo bonus (even though the NNUE evaluation inherently already takes into account the side to move in the "transform" step) and scaling the evaluation towards zero as rule50_count() approaches 50 moves.
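The steps of this explanation can be traced in a short sketch. It uses plain Python integers instead of fixed-width SIMD lanes, stores the weights in a dictionary with hypothetical keys (`w1`, `b1`, and so on), and assumes that the final division truncates toward zero as C integer division does. It omits the Tempo bonus and the rule50 scaling.

```python
FV_SCALE = 16


def clamp(value):
    """Clip to the 0..127 range used for the 8-bit activations."""
    return max(0, min(127, value))


def transform(acc_side_to_move, acc_other):
    """Concatenate both 256-element halves, side to move first, and clamp."""
    return [clamp(v) for v in list(acc_side_to_move) + list(acc_other)]


def affine(inputs, weights, biases):
    """Matrix-vector product plus bias; one weight row per output neuron."""
    return [bias + sum(w * x for w, x in zip(row, inputs))
            for row, bias in zip(weights, biases)]


def hidden_layer(inputs, weights, biases):
    """Affine layer, divide by 64, then clamp to 0..127."""
    return [clamp(v // 64) for v in affine(inputs, weights, biases)]


def evaluate(acc_side_to_move, acc_other, net):
    x = transform(acc_side_to_move, acc_other)   # 512 values in 0..127
    x = hidden_layer(x, net["w1"], net["b1"])    # 32x512 weights -> 32 values
    x = hidden_layer(x, net["w2"], net["b2"])    # 32x32 weights -> 32 values
    output = affine(x, net["w3"], net["b3"])[0]  # 1x32 weights -> 1 value
    # Assumption: truncate toward zero, like C integer division.
    return int(output / FV_SCALE)
```

For negative sums, `v // 64` floors rather than truncates, but the result is clamped to 0 either way, so the choice does not affect the hidden layers.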

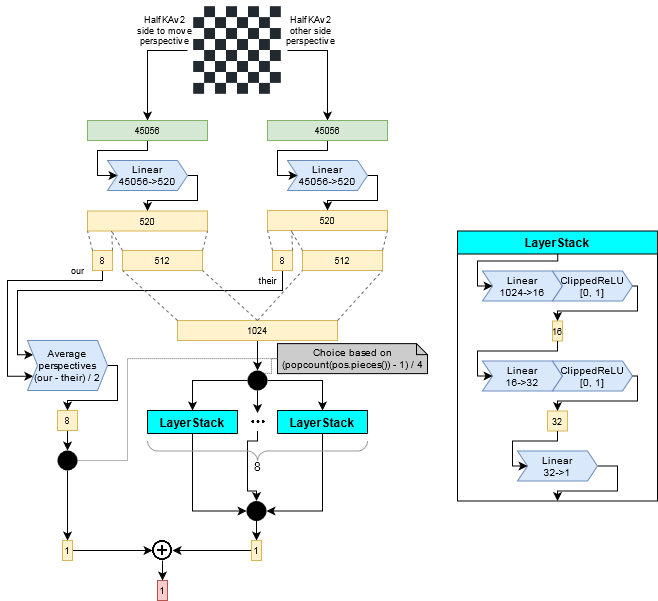

HalfKA

In subsequent Stockfish versions the network architecture was further improved by Tomasz Sobczyk et al. The HalfKA architecture uses 12x64x64 = 49,152 inputs, for each of the 12 piece types times 64 squares for each of the 64 own king squares, times two, for both the side-to-move and the other side's perspective. It further uses a vertical flip instead of the HalfKP rotation. HalfKAv2, as applied in Stockfish 14, saves some space by exploiting the king square redundancy, using 11x64x64 = 45,056 inputs per side, mapped to a 2x520 linear feature transformer [25] [26] [27]. It further feeds 8x2 outputs of this feature transformer directly to the output for better learning of unbalanced material configurations [28]. Another improvement was the use of eight 512x2->16->32->1 output sub-networks, selected by (piece_count-1) div 4 in the 0 to 7 range [29].
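The arithmetic in this paragraph can be checked with a few lines. The helper names (`feature_count`, `flip_vertical`, `output_bucket`) are hypothetical, and the assumption that `piece_count` includes both kings (so it ranges from 2 to 32) is ours.

```python
NUM_SQUARES = 64


def feature_count(piece_kinds):
    """Inputs per perspective: piece kinds x piece squares x own-king squares."""
    return piece_kinds * NUM_SQUARES * NUM_SQUARES


HALFKA_INPUTS = feature_count(12)    # all 12 piece kinds, kings included
HALFKAV2_INPUTS = feature_count(11)  # king redundancy removed


def flip_vertical(square):
    """Mirror a square top to bottom (xor 56), e.g. e1 (4) becomes e8 (60)."""
    return square ^ 56


def output_bucket(piece_count):
    """Select one of the eight output sub-networks by total piece count."""
    if not 2 <= piece_count <= 32:
        raise ValueError("piece_count is assumed to lie in 2..32")
    return (piece_count - 1) // 4


print(HALFKA_INPUTS, HALFKAV2_INPUTS)
print([output_bucket(n) for n in (2, 4, 5, 16, 32)])
```

Each bucket covers four consecutive piece counts, so bare-king endgames and the full starting position fall into the first and last sub-network respectively.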

HalfKAv2 architecture by Tomasz Sobczyk [30]

Network

Networks were built by volunteers and uploaded to Fishtest for testing. Networks with good test results are released officially on the Fishtest website [31], at an average rate of one network every two weeks [32]. After a long discussion about the best way to publish networks with Stockfish [33], the development team decided to embed the default network into the Stockfish binaries, ensuring that NNUE always works and making it more convenient for users.

In late 2020, Gary Linscott started an implementation of the Stockfish NNUE training in PyTorch [34] [35], using GPU resources to train networks efficiently. Further, the collaboration with the Leela Chess Zero team in February 2021 [36] paid off by providing billions of positions to train the new networks [37].

Hybrid

In August 2020 a new patch changed Stockfish NNUE into a hybrid engine: it used the NNUE evaluation only in positions with fairly balanced material and the classical evaluation otherwise. This gave a speedup of up to 10% and a gain of 20 Elo [38]. At that point, NNUE had already added around 100 Elo to Stockfish. In the same month, Stockfish changed its default evaluation mode from classical to hybrid, the last step towards fully accepting NNUE. With the Stockfish 16 release in 2023, the hand-crafted evaluation function was removed and the transition to a purely NNUE-based evaluation was complete.
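The hybrid selection can be pictured as a simple dispatch on material imbalance. The sketch below is only an illustration of that idea: the function name, the use of a centipawn-like material balance, and the default threshold of 500 are assumptions, not values taken from the patch.

```python
def hybrid_evaluate(material_balance, nnue_eval, classical_eval, threshold=500):
    """Use NNUE for roughly balanced material, the classical eval otherwise.

    material_balance: signed material difference (hypothetical units).
    nnue_eval, classical_eval: zero-argument callables returning a score.
    threshold: hypothetical cutoff for "fairly balanced".
    """
    if abs(material_balance) < threshold:
        return nnue_eval()
    # Lopsided positions are usually decided anyway, so the cheaper
    # classical evaluation is used and the search runs faster.
    return classical_eval()


print(hybrid_evaluate(30, lambda: "nnue", lambda: "classical"))
print(hybrid_evaluate(-900, lambda: "nnue", lambda: "classical"))
```

Passing the evaluators as callables means that only the selected one is actually computed, which is where the speedup in such a scheme would come from.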

Strong Points

For Users

- Runs on the CPU only and doesn't require expensive video cards, nor the installation of drivers and specific third-party libraries. Thus it is much easier to install (compared to engines using deep convolutional neural networks, such as Leela Chess Zero) and suitable for almost all modern computers. Using a GPU is not even practical for such a small net: the host-device latency, aka kernel-launch overhead [39] [40], to a then underemployed GPU is too high for the intended NPS range [41]

- Is released with only one network (selectable via UCI options), which spares users the confusion of finding, selecting and setting up a network. The network is carefully selected from Fishtest

For Developers

- Requires only small training sets. Some high-scoring networks can be built by one or a few people within a few days. It doesn't require massive computing resources from a supercomputer and/or from the community

- Doesn't require complicated systems such as a sophisticated client-server model to train networks. A single binary from Nodchip's repo is enough for training

- The NNUE code is independent, can be separated easily from the rest and integrated into other engines [42]

Attracted by the new advantages and encouraged by some impressive successes, many developers joined or continued to work on the project. The official Stockfish repository shows that the number of commits and ideas increased significantly after merging NNUE.

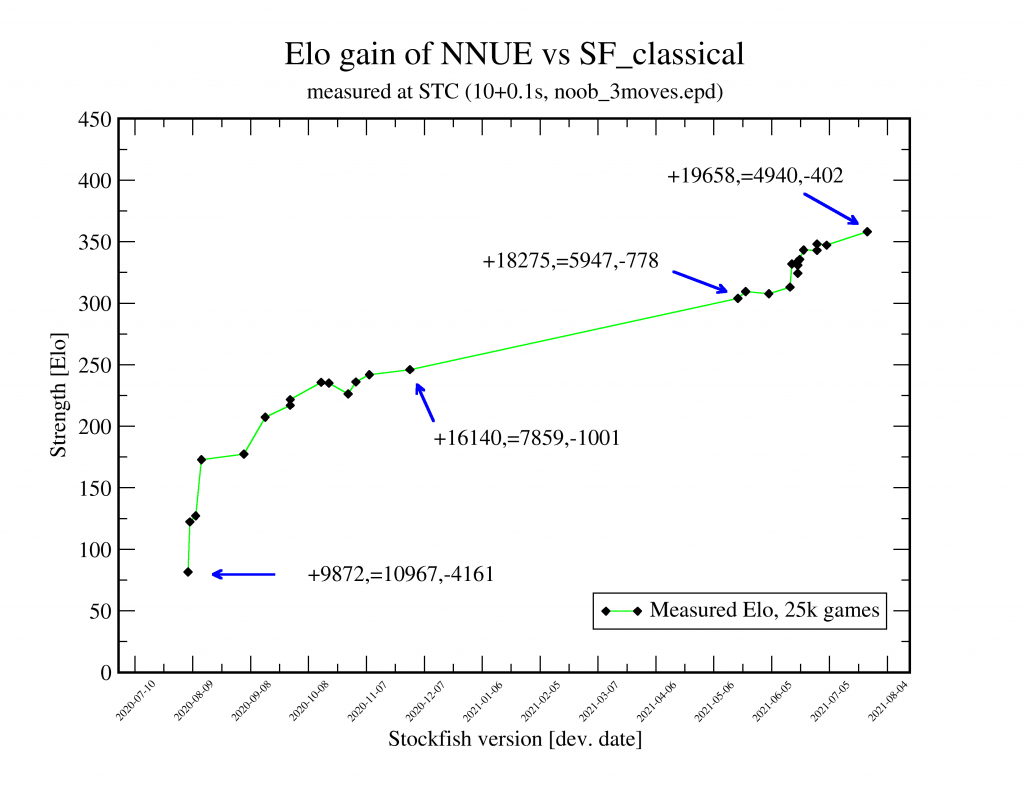

Elo gain

Joost VandeVondele created a graph showing how much Elo Stockfish gained with NNUE within one year [43]:

Suggestions

In reply to Unai Corzo, Motohiro Isozaki aka Yaneurao suggested three techniques that were applied successfully in Shogi and could be brought back to Stockfish NNUE, possibly improving it by another 100 - 200 Elo [44] [45]:

- Optimizing all parameters together by stochastic optimization

- Switching between multi-evaluation functions, according to game phases

- Automatic generation of opening book on the fly

See also

Forum Posts

2020 ...

January ...

- The Stockfish of shogi by Larry Kaufman, CCC, January 07, 2020 » Shogi

- Stockfish NNUE by Henk Drost, CCC, May 31, 2020 » Stockfish

- Stockfish NN release (NNUE) by Henk Drost, CCC, May 31, 2020

- nnue-gui 1.0 released by Norman Schmidt, CCC, June 17, 2020

- stockfish-NNUE as grist for SF development? by Warren D. Smith, FishCooking, June 21, 2020

July

- How strong is Stockfish NNUE compared to Leela.. by OmenhoteppIV, LCZero Forum, July 13, 2020 » Leela Chess Zero

- Can the sardine! NNUE clobbers SF by Henk Drost, CCC, July 16, 2020

- End of an era? by Michel Van den Bergh, FishCooking, July 20, 2020

- Sergio Vieri second net is out by Sylwy, CCC, July 21, 2020

- NNUE accessible explanation by Martin Fierz, CCC, July 21, 2020

- Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, July 23, 2020

- Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, July 24, 2020

- Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, August 03, 2020

- Stockfisch NNUE macOS binary requested by Steppenwolf, CCC, July 25, 2020

- Stockfish NNUE by Lion, CCC, July 25, 2020

- 7000 games testrun of SFnnue sv200724_0123 finished by Stefan Pohl, FishCooking, July 26, 2020

- SF-NNUE going forward... by Zenmastur, CCC, July 27, 2020

- New sf+nnue play-only compiles by Norman Schmidt, CCC, July 27, 2020

- Stockfish+NNUEsv +80 Elo vs Stockfish 17jul !! by Kris, Rybka Forum, July 28, 2020

- LC0 vs. NNUE - some tech details... by Srdja Matovic, CCC, July 29, 2020 » Lc0

- Stockfish NNUE and testsuites by Jouni Uski, CCC, July 29, 2020

- Stockfish NNue [download ] by Ed Schröder, CCC, July 30, 2020

August

- Repository for Stockfish+NNUE Android Builds by AdminX, CCC, August 02, 2020

- SF NNUE Problem by Stephen Ham, CCC, August 03, 2020

- Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, August 03, 2020 » NNUE accessible explanation

- [NNUE] Worker update on fishtest by Joost VandeVondele, FishCooking, August 03, 2020

- this will be the merge of a lifetime : SF 80 Elo+ by MikeB, CCC, August 04, 2020

- Re: this will be the merge of a lifetime : SF 80 Elo+ by Henk Drost, CCC, August 04, 2020

- You can now look inside NNUE and look at its Per square value estimation by Henk Drost, CCC, August 04, 2020

- Is this SF NN almost like 20 MB book? by Jouni Uski, CCC, August 04, 2020

- NNUE evaluation merged in master by Joost VandeVondele, FishCooking, August 06, 2020

- What happens with my hyperthreading? by Kai Laskos, CCC, August 06, 2020 » Thread

- Stockfish NNUE style by Rowen, CCC, August 08, 2020

- SF NNUE training questions by Jouni Uski, CCC, August 10, 2020

- Progress of Stockfish in 6 days by Kai Laskos, CCC, August 12, 2020

- Neural Networks weights type by Fabio Gobbato, CCC, August 13, 2020

- Don't understand NNUE by Lucasart, CCC, August 14, 2020

- SF+NNUE reach the ceiling? by Corres, CCC, August 27, 2020

- The most stupid idea by the Stockfish Team by Damir, CCC, August 30, 2020

September

- Stockfish 12 by Joost VandeVondele, FishCooking, September 02, 2020

- Stockfish 12 is released today! by Nay Lin Tun, CCC, September 02, 2020

- Stockfish 12 has arrived! by daniel71, CCC, September 02, 2020

- AVX2 optimized SF+NNUE and processor temperature by corres, CCC, September 05, 2020 » AVX2

October

- BONA_PIECE_ZERO by elcabesa, CCC, October 04, 2020

- SF NNUE/Classical by Fauzi, FishCooking, October 05, 2020

- How to scale stockfish NNUE score? by Maksim Korzh, CCC, October 17, 2020 » Stockfish NNUE, Scorpio NNUE

- Embedding Stockfish NNUE to ANY CHESS ENGINE: YouTube series by Maksim Korzh, CCC, October 17, 2020 » BBC NNUE

- NNUE Question - King Placements by Andrew Grant, CCC, October 23, 2020 » NNUE Structure

- Re: NNUE Question - King Placements by syzygy, CCC, October 23, 2020

- Re: NNUE Question - King Placements by syzygy, CCC, October 23, 2020

2021 ...

- Shouldn't positional attributes drive SF's NNUE input features (rather than king position)? by Nick Pelling, FishCooking, January 10, 2021

- stockfish NNUE question by Jon Dart, CCC, January 21, 2021

- 256 in NNUE? by Ted Wong, CCC, January 28, 2021

- Fat Fritz 2 by Jouni Uski, CCC, February 09, 2021 » Fat Fritz 2.0

- NNUE Research Project by Ed Schröder, CCC, March 10, 2021 » NNUE

- NNUE ranking by Jim Logan, CCC, March 12, 2021

- Stockfish with new NNUE architecture and bigger net released by Stefan Pohl, CCC, May 19, 2021 [46]

- Why NNUE trainer requires an online qsearch on each training position? by nkg114mc, CCC, January 01, 2022

External Links

Basics

- Stockfish NNUE – The Complete Guide, June 19, 2020 (Japanese and English)

- 3 technologies in shogi AI that could be used for chess AI by Motohiro Isozaki, August 21, 2020

- Stockfish NNUE Wiki

- NNUE merge · Issue #2823 · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 25, 2020 [47]

- Stockfish Evaluation Guide [48]

- NNUE Guide (nnue-pytorch/nnue.md at master · glinscott/nnue-pytorch · GitHub) hosted by Gary Linscott

- One year of NNUE.... · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 26, 2021

Source

- GitHub - Official-stockfish

- GitHub - nodchip/Stockfish: UCI chess engine by Nodchip

- GitHub - vondele/Stockfish at nnue-player-wip by Joost VandeVondele

- GitHub - tttak/Stockfish: UCI chess engine

- GitHub - joergoster/Stockfish-NNUE: UCI Chess engine Stockfish with an Efficiently Updatable Neural-Network-based evaluation function hosted by Jörg Oster

- GitHub - FireFather/sf-nnue: Stockfish NNUE (efficiently updateable neural network) by Norman Schmidt

- GitHub - FireFather/nnue-gui: basic windows application for using nodchip's stockfish-nnue software by Norman Schmidt

Networks

Rating

- Regression Tests

- Stockfish 14 64-bit 8CPU in CCRL Blitz

- Stockfish 12 64-bit in CCRL Blitz

- Stockfish+NNUE 150720 64-bit 4CPU in CCRL Blitz

Misc

- Stockfish from Wikipedia

- Nue from Wikipedia

- Senri Kawaguchi - The Quarantuned Music Festival, May 2020, YouTube Video

References

- [1] Stockfish NNUE Logo from GitHub - nodchip/Stockfish: UCI chess engine by Nodchip

- [2] Yu Nasu (2018). ƎUИИ Efficiently Updatable Neural-Network based Evaluation Functions for Computer Shogi. Ziosoft Computer Shogi Club, pdf (Japanese with English abstract) GitHub - asdfjkl/nnue translation

- [3] The Stockfish of shogi by Larry Kaufman, CCC, January 07, 2020

- [4] GitHub - yaneurao/YaneuraOu: YaneuraOu is the World's Strongest Shogi engine(AI player), WCSC29 1st winner, educational and USI compliant engine

- [5] GitHub - Tama4649/Kristallweizen: 第29回世界コンピュータ将棋選手権 準優勝のKristallweizenです。

- [6] 将棋ソフト開発者がStockfishに貢献する日 The day when shogi software developers contribute to Stockfish by Motohiro Isozaki, June 2019

- [7] shogi engine developer claims he can make Stockfish stronger, Reddit, August 2019

- [8] Stockfish NNUE – The Complete Guide, June 19, 2020 (Japanese and English)

- [9] Stockfish NN release (NNUE) by Henk Drost, CCC, May 31, 2020

- [10] Can the sardine! NNUE clobbers SF by Henk Drost, CCC, July 16, 2020

- [11] Introducing NNUE Evaluation, August 06, 2020

- [12] NNUE merge · Issue #2823 · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 25, 2020

- [13] GitHub - nodchip/Stockfish: UCI chess engine by Nodchip

- [14] Pytorch NNUE training by Gary Linscott, CCC, November 08, 2020

- [15] Why NNUE trainer requires an online qsearch on each training position? by nkg114mc, CCC, January 01, 2022

- [16] Stockfish 12, The Stockfish Team, Stockfish Blog, September 02, 2020

- [17] Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, July 23, 2020

- [18] Re: NNUE Question - King Placements by syzygy, CCC, October 23, 2020

- [19] NNUE eval rotate vs mirror · Issue #3021 · official-stockfish/Stockfish · GitHub by Terje Kirstihagen, August 17, 2020

- [20] Stockfish/halfkp_256x2-32-32.h at master · official-stockfish/Stockfish · GitHub

- [21] Stockfish/clipped_relu.h at master · official-stockfish/Stockfish · GitHub

- [22] Image courtesy Roman Zhukov, revised version of the image posted in Re: Stockfish NN release (NNUE) by Roman Zhukov, CCC, June 17, 2020, labels corrected October 23, 2020, see Re: NNUE Question - King Placements by Andrew Grant, CCC, October 23, 2020

- [23] Re: NNUE Question - King Placements by syzygy, CCC, October 23, 2020

- [24] Cfish/nnue.c at master · syzygy1/Cfish · GitHub

- [25] New NNUE architecture and net · official-stockfish/Stockfish@e8d64af · GitHub

- [26] Update default net to nn-8a08400ed089.nnue by Sopel97 · Pull Request #3474 · official-stockfish/Stockfish · GitHub

- [27] HalfKAv2 feature set | nnue-pytorch/nnue.md at master · glinscott/nnue-pytorch · GitHub

- [28] A part of the feature transformer directly forwarded to the output | nnue-pytorch/nnue.md at master · glinscott/nnue-pytorch · GitHub

- [29] Multiple PSQT outputs and multiple subnetworks | nnue-pytorch/nnue.md at master · glinscott/nnue-pytorch · GitHub

- [30] HalfKAv2.png Image courtesy by Tomasz Sobczyk

- [31] Neural Net download and statistics

- [32] One year of NNUE.... · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 26, 2021

- [33] Improve dealing with the default net? Issue #3030 · official-stockfish/Stockfish · GitHub by Joost VandeVondele, August 19, 2020

- [34] Pytorch NNUE training by Gary Linscott, CCC, November 08, 2020

- [35] GitHub - glinscott/nnue-pytorch: NNUE (Chess evaluation) trainer in Pytorch

- [36] Stockfish 13 by Joost VandeVondele, FishCooking, February 19, 2021

- [37] Stockfish 14, The Stockfish Team, July 02, 2021

- [38] NNUE evaluation threshold by MJZ1977 · Pull Request #2916 · official-stockfish/Stockfish · GitHub, August 06, 2020

- [39] AB search with NN on GPU... by Srdja Matovic, CCC, August 13, 2020 » GPU

- [40] kernel launch latency - CUDA / CUDA Programming and Performance - NVIDIA Developer Forums by LukeCuda, June 18, 2018

- [41] stockfish with graphics card by h1a8, CCC, August 06, 2020

- [42] NNUE Engines

- [43] One year of NNUE.... · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 26, 2021

- [44] 3 technologies in shogi AI that could be used for chess AI, Motohiro Isozaki, August 2020

- [45] GitHub - NNUE ideas and discussion (post-merge). #2915, August 2020

- [46] Update default net to nn-8a08400ed089.nnue by Sopel97 · Pull Request #3474 · official-stockfish/Stockfish · GitHub by Tomasz Sobczyk

- [47] An info by Sylwy, CCC, July 25, 2020

- [48] You can now look inside NNUE and look at its Per square value estimation by Henk Drost, CCC, August 04, 2020